Get Started with Web App performance

In a web application, performance might refer to:

- Loading performance: how quickly the page loads

- Rendering performance: how quickly the page elements respond to user interactions

I always recommend this Google web fundamentals guide as a good place to start, with detailed explanations of the different metrics. But once we understand the metrics and the optimisation techniques, how do we actually go about the process? That is what I’ll attempt to guide you through here.

Performance is about the cycle of Optimise -> Measure -> Optimise. Here is a sample application (https://girlswhojs.herokuapp.com/) with a huge hero banner image and a map loaded on another route. We’re going to attempt to improve its perceived performance by optimising the critical rendering path, so that the content most relevant to the user is displayed first. We’ll adopt a few techniques, some on the client and some on the server.

Some of the useful tools are:

- WebPageTest

- GTmetrix

- Google PageSpeed

- Pingdom

- YSlow

- Chrome DevTools

How?

We’re going to use WebPageTest to understand our sample app’s performance as we proceed through each step. I’ve got a simple Heroku instance set up with a GitHub deployment pipeline, so that I can deploy the changes from each step to the instance and run WebPageTest on it. If you’re unfamiliar with interpreting a waterfall chart, this should serve as a good place to start.

Step — 1: Compression (on server)

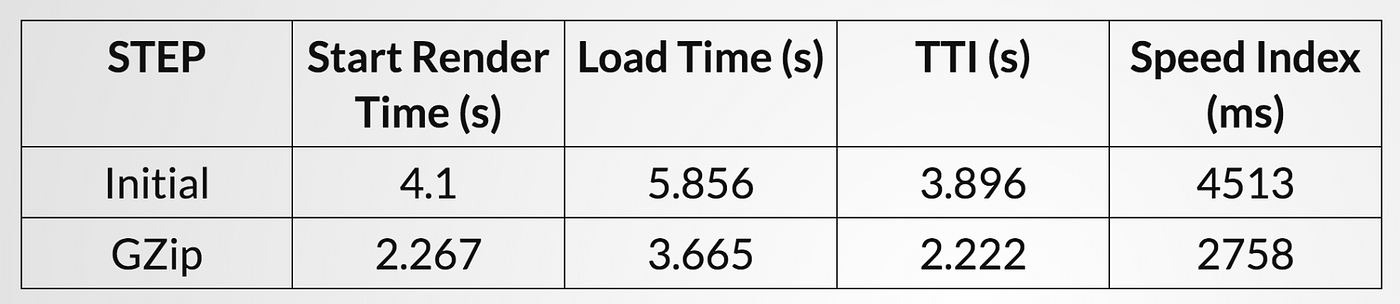

Gzip compression can reduce the size of text-based files sent from the server to the browser by about 70%. Since this is a Node application, all I had to do was include the compression middleware and, voila! The table below shows the differences in loading times after this step was performed.

Webpagetest results — The gains on such a small effort

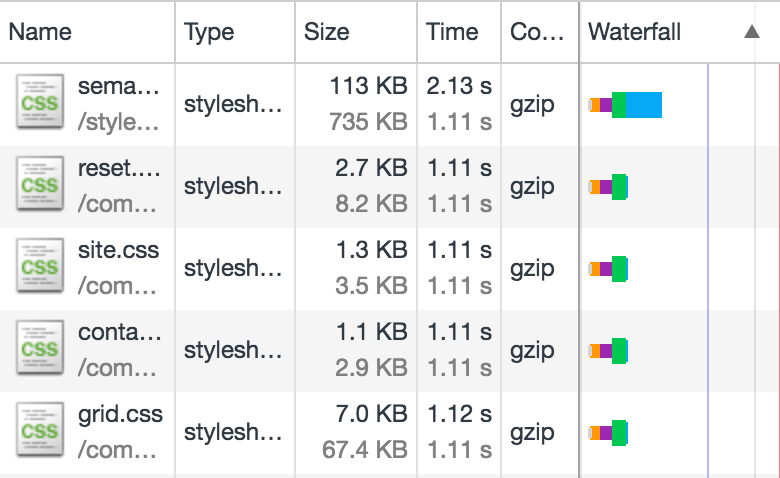

This image shows, in the Size column, the original size (below) and the compressed size (above).

The new kid on the block for compression is Brotli, which promises better compression ratios.

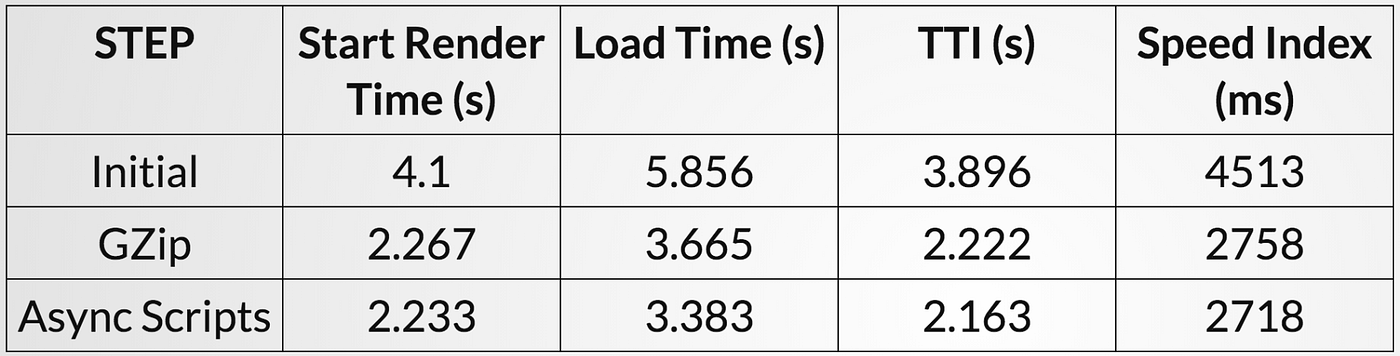
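To make the effect concrete, here is a minimal sketch of the kind of work a compression middleware does conceptually: look at the client's Accept-Encoding header, pick an encoding, and compress the body. It uses Node's built-in zlib module rather than the middleware used in the sample app, and the helper names (`pickEncoding`, `compressBody`) and the sample payload are made up for illustration.

```javascript
const zlib = require('node:zlib');

// Pick the best encoding the client advertises in its Accept-Encoding header.
// Simplification: q-values are ignored; a production server should honour them.
function pickEncoding(acceptEncoding = '') {
  const offered = acceptEncoding
    .split(',')
    .map((token) => token.split(';')[0].trim().toLowerCase());
  if (offered.includes('br')) return 'br';
  if (offered.includes('gzip')) return 'gzip';
  return 'identity';
}

// Compress a text body with the chosen encoding ('identity' means no compression).
function compressBody(body, encoding) {
  const input = Buffer.from(body, 'utf8');
  if (encoding === 'br') return zlib.brotliCompressSync(input);
  if (encoding === 'gzip') return zlib.gzipSync(input);
  return input;
}

// Hypothetical payload: repetitive markup, similar in spirit to real HTML or CSS.
const sampleBody = '<li class="card">Girls who JS</li>\n'.repeat(500);
for (const encoding of ['identity', 'gzip', 'br']) {
  const bytes = compressBody(sampleBody, encoding).length;
  console.log(`${encoding}: ${bytes} bytes`);
}
```

The exact savings depend heavily on the content: repetitive text compresses very well, while already-compressed formats such as JPEG or WOFF2 gain little, so measure on your own assets.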

Step — 2: Asynchronous loading of resources (on client)

Anything not required immediately for user action should be loaded asynchronously, so that the main thread is free to process critical resources. In our case, the map is displayed only after a user action (navigating to a different route), so the Google Maps script can be downloaded asynchronously.

<script src="https://maps.googleapis.com/maps/api/js?key=xyz" async></script>
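Since the map only appears on another route, a further option is to inject the script only when that route is visited. The following is a rough sketch of that idea, not what the sample app does; `loadScript` and `initMap` are hypothetical names, and the `doc` parameter exists only so the helper can be exercised outside a browser.

```javascript
// Inject a script tag on demand and resolve once the browser has loaded it.
// `doc` defaults to the page's document.
function loadScript(src, doc = globalThis.document) {
  return new Promise((resolve, reject) => {
    const script = doc.createElement('script');
    script.src = src;
    // Dynamically injected scripts are async by default; set it explicitly for clarity.
    script.async = true;
    script.onload = () => resolve(script);
    script.onerror = () => reject(new Error(`Failed to load ${src}`));
    doc.head.appendChild(script);
  });
}

// Usage in the browser, inside a hypothetical handler for the map route:
// loadScript('https://maps.googleapis.com/maps/api/js?key=xyz').then(initMap);
```

The trade-off is that the first visit to the map route now waits for the download, so this suits resources that many visitors never need.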

Step — 3: Cache static resources (on server)

Caching improves repeat-view loading times. This is done by setting the max-age directive of the Cache-Control header in the asset response, so that subsequent requests for those assets from the same user are served from the browser cache.

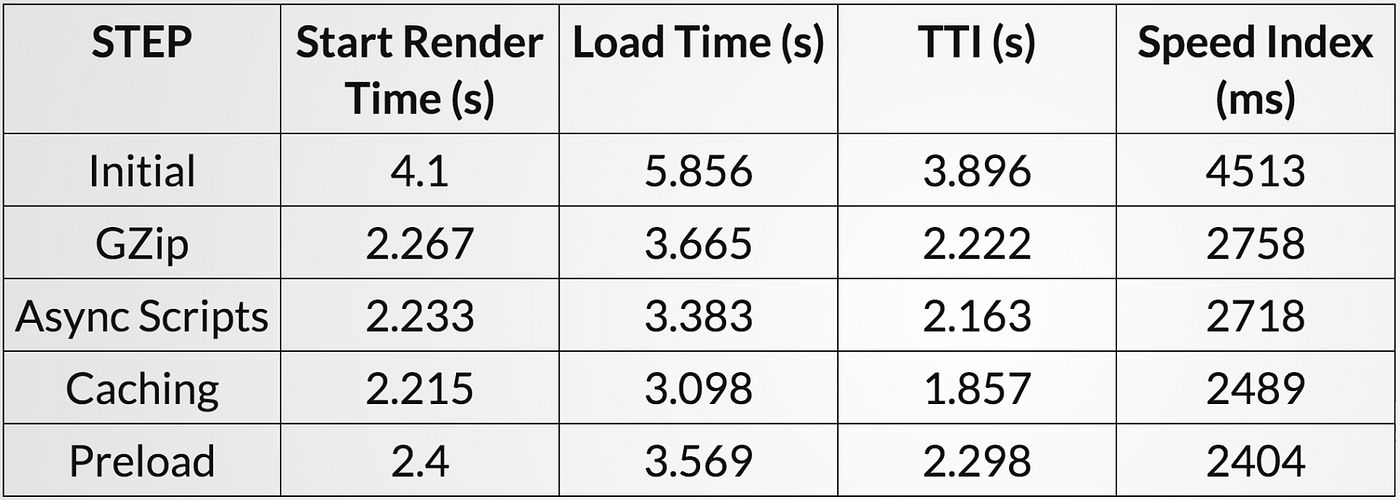
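As an illustration, a server might choose the Cache-Control value per request along these lines. This is a sketch under assumptions: the extension list, the one-year lifetime, and the `cacheControlFor` helper are all hypothetical, and the long lifetime is only safe if asset file names change whenever their content changes.

```javascript
const path = require('node:path');

const ONE_YEAR_IN_SECONDS = 365 * 24 * 60 * 60;
const STATIC_EXTENSIONS = new Set(['.js', '.css', '.png', '.jpg', '.svg', '.woff2']);

// Return the Cache-Control value for a requested URL path.
// Assumes `urlPath` carries no query string.
function cacheControlFor(urlPath) {
  const extension = path.extname(urlPath).toLowerCase();
  if (STATIC_EXTENSIONS.has(extension)) {
    // Assumes fingerprinted file names (e.g. app.3f2a1c.js), so a new
    // deployment produces new URLs instead of stale cached copies.
    return `public, max-age=${ONE_YEAR_IN_SECONDS}`;
  }
  // HTML and everything else: the browser may store it but must revalidate.
  return 'no-cache';
}

console.log(cacheControlFor('/static/app.3f2a1c.js'));
console.log(cacheControlFor('/index.html'));
```

The returned string would then be set on the response, for example with `res.setHeader('Cache-Control', cacheControlFor(req.url))` in a plain Node server.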

Step — 4: Preloading resources of priority (on client)

Preload is currently fully supported only on Chrome, but it doesn’t hurt performance on other browsers until they catch up. Here, I tried preloading the huge hero image so that the page would appear visually complete sooner. The result below, however, suggests that this negatively impacted performance, which is why the measuring phase is so important for validating our theories on improving performance.

Preload: Fetch resources with high priority as soon as the tag is encountered, while the HTML is still being parsed

Prefetch: Fetch resources needed for subsequent navigation (later routes) with lower priority, in the background

DNS-prefetch: Perform the DNS lookup for a domain in the background

Preconnect: Set up the connection to an origin before requests are triggered

<link rel="preload" as="font" type="font/woff2" href="/fonts/font.woff2" crossorigin>
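If the page is rendered on the server, the four hints can be generated from a small list instead of being written by hand. The sketch below is one way to do that; `resourceHint`, the `/map-route.js` bundle, and the choice of origins are hypothetical, and attribute values are assumed to be trusted since nothing is escaped.

```javascript
// Render one <link> resource hint as an HTML string.
// Values are inserted as-is, so only pass trusted, developer-defined input.
function resourceHint({ rel, href, as, type, crossorigin = false }) {
  const attributes = [`rel="${rel}"`];
  if (as) attributes.push(`as="${as}"`);
  if (type) attributes.push(`type="${type}"`);
  attributes.push(`href="${href}"`);
  if (crossorigin) attributes.push('crossorigin');
  return `<link ${attributes.join(' ')}>`;
}

const hints = [
  // Needed for the current page: fetch early. Font preloads need `crossorigin`.
  { rel: 'preload', as: 'font', type: 'font/woff2', href: '/fonts/font.woff2', crossorigin: true },
  // Needed for a later route: fetch with low priority in the background.
  { rel: 'prefetch', href: '/map-route.js' },
  // Resolve the domain name ahead of time.
  { rel: 'dns-prefetch', href: 'https://maps.googleapis.com' },
  // Go further and open the connection ahead of time.
  { rel: 'preconnect', href: 'https://maps.googleapis.com' },
];

console.log(hints.map(resourceHint).join('\n'));
```

Each hint competes with other requests for bandwidth, so it is worth adding them one at a time and measuring after each.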

Step — 5: HTTP/2 (on server)

A key performance bottleneck in HTTP/1.1 is latency, combined with the limited number of simultaneous TCP connections per host (typically 6). With HTTP/2, a single TCP connection can be reused for multiple concurrent asset requests. The resulting improvement should be pretty obvious from the images below.

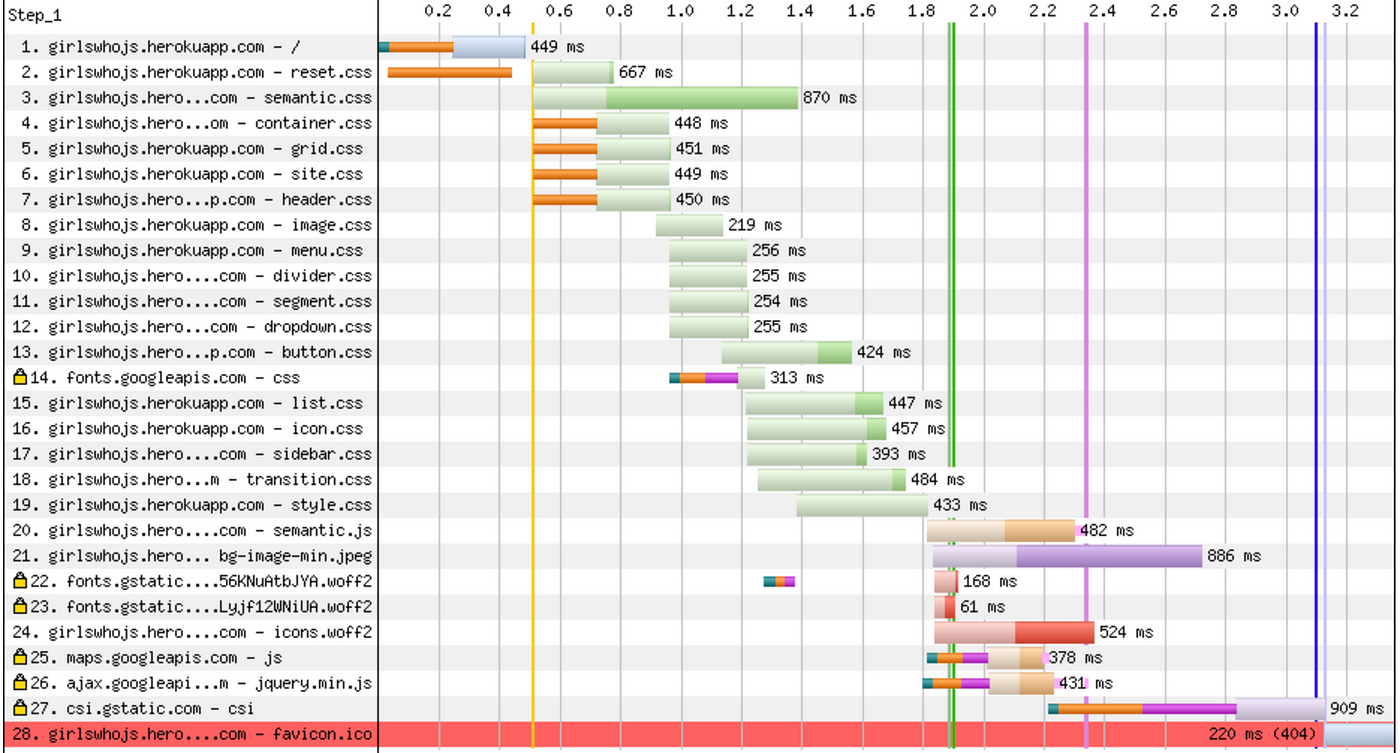

Waterfall — Before HTTP/2 implementation

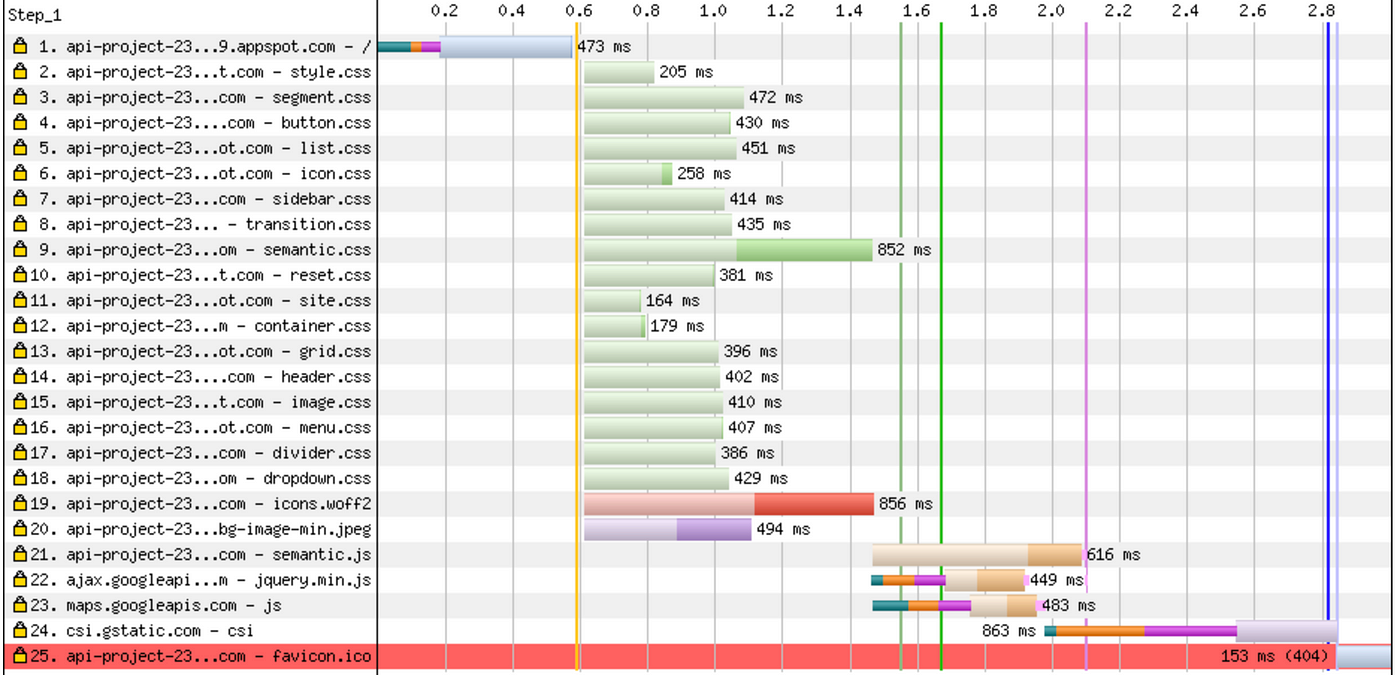

Waterfall — After HTTP/2 implementation

Though browser support is still not 100%, HTTP/2 pushes us to rethink some of the good practices currently adopted, like bundling, domain sharding, image sprites and inline assets.

Browsers support HTTP/2 only over TLS, so enabling it on the server requires installing an SSL certificate. Most browsers currently support HTTP/2.
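For a Node server, one possible setup uses the built-in http2 module. This is a sketch only, not how the sample app was configured: `createHttp2Server`, `handleRequest`, and the certificate paths are hypothetical, and many hosting platforms terminate TLS in front of the app, in which case HTTP/2 is enabled there instead.

```javascript
const http2 = require('node:http2');
const fs = require('node:fs');

// Request handler using the http2 compatibility API, whose request and
// response objects resemble the familiar HTTP/1.1 ones.
function handleRequest(req, res) {
  res.writeHead(200, { 'content-type': 'text/plain; charset=utf-8' });
  res.end(`Served ${req.url} over HTTP/${req.httpVersion}\n`);
}

// Create (but do not start) an HTTP/2 server from a TLS key and certificate.
function createHttp2Server({ keyPath, certPath }) {
  return http2.createSecureServer(
    {
      key: fs.readFileSync(keyPath),
      cert: fs.readFileSync(certPath),
      allowHTTP1: true, // fall back to HTTP/1.1 for clients without HTTP/2
    },
    handleRequest
  );
}

// Usage, assuming certificate files exist at these hypothetical paths:
// createHttp2Server({ keyPath: 'server.key', certPath: 'server.crt' }).listen(8443);
```

Keeping `allowHTTP1` on means older clients still get a response, which makes it easier to compare waterfalls for both protocols against the same server.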

This table summarises the journey of optimisation we just took and the gains achieved.

The speaker notes in these slides contain links to the detailed reports of all the WebPageTest runs above.